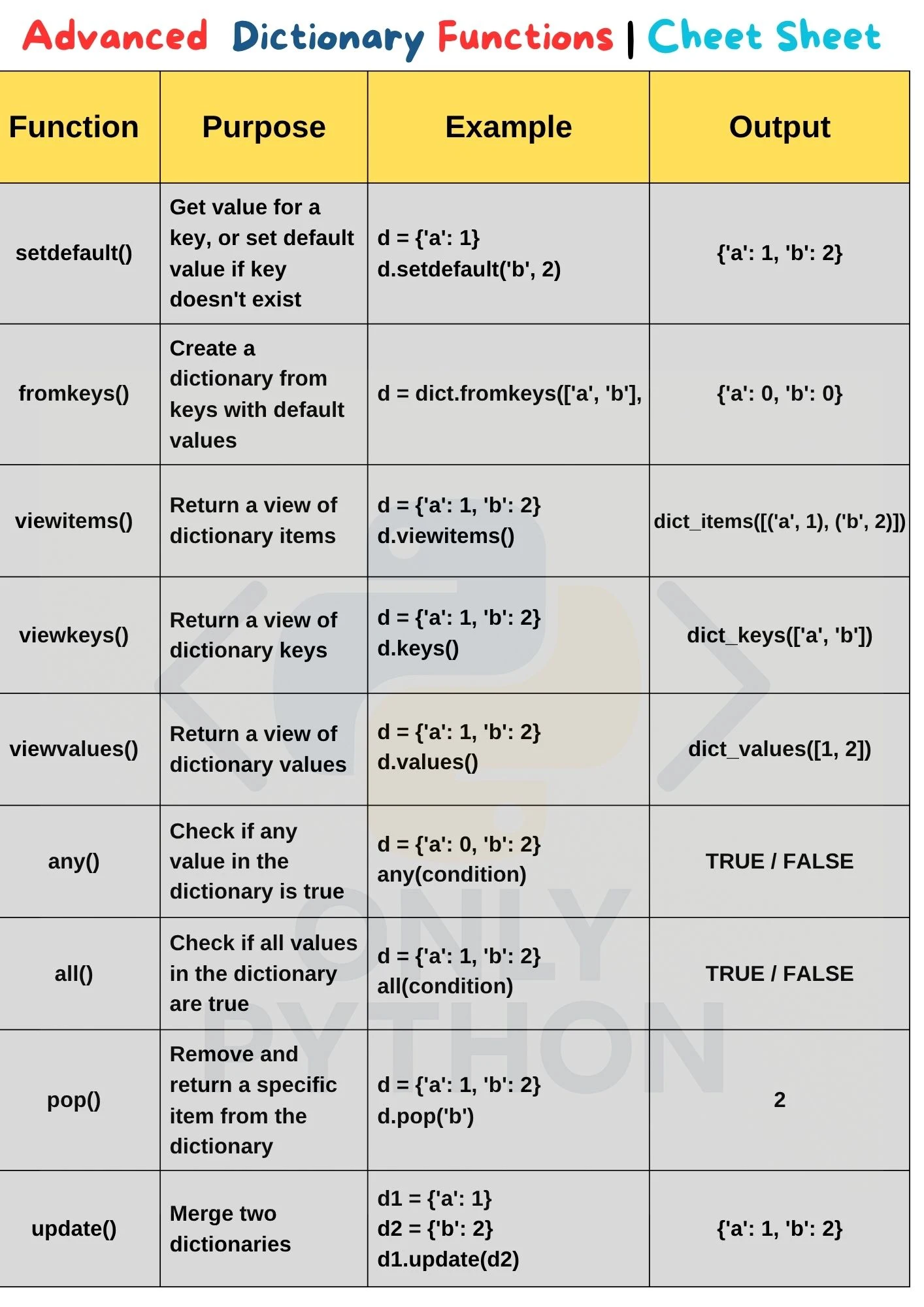

Python: Advanced Dictionary Functions Cheat Sheet with Examples

1. setdefault() Method

The setdefault() method returns the value of a specified key. If the key does not exist, it inserts the key with the specified default value and returns that value.

Example 1: Existing key

student = {'name': 'Liam', 'age': 24}

# Key 'name' exists, returns value

print(student.setdefault('name', 'Unknown')) # Liam

Output:

Liam

Example 2: Non-existing key

# Key 'grade' does not exist, so it's added with default 'A'

print(student.setdefault('grade', 'A'))

print(student)

Output:

A

{'name': 'Liam', 'age': 24, 'grade': 'A'}

Example 3: Using setdefault() without default value

# Adds key with default value None

print(student.setdefault('department'))

print(student)

Output:

None

{'name': 'Liam', 'age': 24, 'grade': 'A', 'department': None}
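A common use of setdefault() is grouping values under a key without first checking whether the key exists. The sketch below assumes a made-up list of (student, course) pairs; the names `enrollments` and `courses_by_student` are illustrative only.

```python
# Hypothetical sample data: (student, course) pairs.
enrollments = [('Liam', 'Math'), ('Mia', 'Physics'), ('Liam', 'History')]

courses_by_student = {}
for name, course in enrollments:
    # The first time a name appears, setdefault() stores an empty list;
    # on later passes it returns the list that is already there.
    courses_by_student.setdefault(name, []).append(course)

print(courses_by_student)
# {'Liam': ['Math', 'History'], 'Mia': ['Physics']}
```

Note that the default argument (here, `[]`) is evaluated on every call, even when the key already exists.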

2. fromkeys() Method

The fromkeys() method creates a new dictionary from an iterable of keys, setting every value to the same given value (default is None).

Example 1: With a specified default value

keys = ['name', 'age', 'course']

default_value = 'Unknown'

new_dict = dict.fromkeys(keys, default_value)

print(new_dict)

Output:

{'name': 'Unknown', 'age': 'Unknown', 'course': 'Unknown'}

Example 2: Without specifying a default value

new_dict_none = dict.fromkeys(keys)

print(new_dict_none)

Output:

{'name': None, 'age': None, 'course': None}

⚠️ Important note:

Using a mutable object as a default value will link all keys to the same object. For example:

keys = ['a', 'b', 'c']

new_dict = dict.fromkeys(keys, [])

new_dict['a'].append(1)

print(new_dict)

Output:

{'a': [1], 'b': [1], 'c': [1]}

This happens because the same list object is shared by all keys.
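One way to avoid the shared-object problem is a dictionary comprehension, which evaluates the value expression once per key. This is a minimal sketch; the variable name `independent` is just for illustration.

```python
keys = ['a', 'b', 'c']

# The expression [] runs once for each key, so every key gets its own list.
independent = {key: [] for key in keys}

independent['a'].append(1)
print(independent)
# {'a': [1], 'b': [], 'c': []}
```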

3. items() Method (View Items)

The items() method returns a view object of the dictionary’s key-value pairs as tuples.

Example 1: Viewing items

student = {'name': 'Mia', 'age': 22, 'course': 'Economics'}

items_view = student.items()

print(items_view)

Output:

dict_items([('name', 'Mia'), ('age', 22), ('course', 'Economics')])

Example 2: Converting items to list

items_list = list(items_view)

print(items_list)

Output:

[('name', 'Mia'), ('age', 22), ('course', 'Economics')]

Example 3: Iterating through items

for key, value in items_view:

    print(f"{key} => {value}")

Output:

name => Mia

age => 22

course => Economics
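Because items() returns a view rather than a copy, the view reflects later changes to the dictionary, while a list made from it is a fixed snapshot. The short sketch below shows the difference; the variable `snapshot` is an illustrative name.

```python
student = {'name': 'Mia', 'age': 22}

items_view = student.items()   # live view of the dictionary
snapshot = list(items_view)    # independent copy taken right now

student['course'] = 'Economics'

print(len(items_view))             # 3 (the view sees the new key)
print(len(snapshot))               # 2 (the list does not)
print(('age', 22) in items_view)   # True (membership test on a pair)
```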

4. keys() Method (View Keys)

The keys() method returns a view object containing all keys in the dictionary.

Example 1: Viewing keys

student = {'name': 'Noah', 'age': 23, 'course': 'Physics'}

keys_view = student.keys()

print(keys_view)

Output:

dict_keys(['name', 'age', 'course'])

Example 2: Converting keys to list

keys_list = list(keys_view)

print(keys_list)

Output:

['name', 'age', 'course']

Example 3: Checking key existence

print('age' in keys_view) # True

print('grade' in keys_view) # False
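A keys view also behaves like a set, so it supports operators such as & and - directly. The sketch below assumes a hypothetical set of required fields called `required`; it is only an illustration of the idea.

```python
student = {'name': 'Noah', 'age': 23, 'course': 'Physics'}
required = {'name', 'age', 'email'}  # hypothetical required fields

present = student.keys() & required   # keys found in both
extra = student.keys() - required     # keys the student has beyond the required ones
missing = required - student.keys()   # required keys the student lacks

# sorted() is used only to make the printed order predictable.
print(sorted(present))   # ['age', 'name']
print(sorted(extra))     # ['course']
print(sorted(missing))   # ['email']
```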

5. values() Method (View Values)

The values() method returns a view object containing all the values in the dictionary.

Example 1: Viewing values

student = {'name': 'Noah', 'age': 23, 'course': 'Physics'}

values_view = student.values()

print(values_view)

Output:

dict_values(['Noah', 23, 'Physics'])

Example 2: Converting values to list

values_list = list(values_view)

print(values_list)

Output:

['Noah', 23, 'Physics']

Example 3: Checking value existence

print('Physics' in values_view) # True

print('Math' in values_view) # False
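A membership test on values() tells you that a value exists, but not which key holds it. One way to look up the keys is to filter items() instead, as in this sketch with made-up data (`student_courses` is an illustrative name).

```python
# Hypothetical data: student name -> course.
student_courses = {'Noah': 'Physics', 'Mia': 'Economics', 'Liam': 'Physics'}

# Collect every key whose value matches.
physics_students = [name for name, course in student_courses.items()
                    if course == 'Physics']
print(physics_students)   # ['Noah', 'Liam']

# Values can repeat, so convert to a set to get the distinct ones.
unique_courses = set(student_courses.values())
print(len(unique_courses))   # 2
```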

6. any() Function

The built-in any() function returns True if at least one element of the iterable is truthy; otherwise, it returns False. If the iterable is empty, it returns False. When a dictionary is passed directly, any() checks its keys, not its values.

Example 1: Check if any student passed (grade > 0)

student_grades = {'Alice': 0, 'Bob': 2, 'Charlie': 0}

result = any(grade > 0 for grade in student_grades.values())

print(result)

Output:

True

Example 2: Empty dictionary

empty = {}

print(any(empty)) # False
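Passing a dictionary straight to any() tests its keys, which can be surprising when you meant to test the values. The sketch below uses a deliberately odd dictionary, `flags`, whose keys are all falsy, to make the difference visible.

```python
# Illustrative dictionary: falsy keys ('' and 0), truthy values.
flags = {'': True, 0: True}

print(any(flags))            # False (checks the keys '' and 0)
print(any(flags.values()))   # True  (checks the values)
```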

7. all() Function

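The built-in all() function returns True if every element in the iterable is truthy; otherwise, it returns False. If the iterable is empty, it returns True, because there is no element that fails the test. When a dictionary is passed directly, all() checks its keys, not its values.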

Example 1: Check if all students passed (grade > 0)

student_grades = {'Alice': 1, 'Bob': 2, 'Charlie': 0}

result = all(grade > 0 for grade in student_grades.values())

print(result)

Output:

False

Example 2: Empty dictionary

empty = {}

print(all(empty)) # True
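Since all() returns True for an empty iterable, an empty grade book would count as "everyone passed". If that is not what you want, one option is to check for emptiness first. The helper name `all_passed` below is hypothetical.

```python
def all_passed(grades):
    """Return True only if there is at least one grade and all are above 0."""
    # bool(grades) is False for an empty dictionary, so all() is never reached.
    return bool(grades) and all(grade > 0 for grade in grades.values())

print(all_passed({}))                          # False
print(all_passed({'Alice': 1, 'Bob': 2}))      # True
print(all_passed({'Alice': 1, 'Charlie': 0}))  # False
```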

8. pop() Method

The pop() method removes the specified key from the dictionary and returns its value. If the key does not exist and a default value is not provided, it raises a KeyError.

Example 1: Pop existing key

student = {'name': 'Olivia', 'age': 21, 'course': 'Math'}

age = student.pop('age')

print(f"Removed age: {age}")

print(student)

Output:

Removed age: 21

{'name': 'Olivia', 'course': 'Math'}

Example 2: Pop non-existing key with default

grade = student.pop('grade', 'Not Assigned')

print(f"Grade: {grade}")

print(student)

Output:

Grade: Not Assigned

{'name': 'Olivia', 'course': 'Math'}

Example 3: Pop non-existing key without default (raises error)

# student.pop('grade') # Uncommenting this will raise KeyError
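To see the error without stopping the program, you can wrap the call in try/except. This is a small sketch; the variable `message` is only there to hold the text for printing.

```python
student = {'name': 'Olivia', 'course': 'Math'}

message = None
try:
    student.pop('grade')   # no default given, and 'grade' is not a key
except KeyError as error:
    # The exception's text is the repr of the missing key.
    message = f"Missing key: {error}"

print(message)   # Missing key: 'grade'
print(student)   # {'name': 'Olivia', 'course': 'Math'} (unchanged)
```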

9. update() Method

The update() method adds key-value pairs from another dictionary or an iterable of key-value pairs. If a key already exists, its value is overwritten. The dictionary is changed in place, and the method returns None.

Example 1: Update with another dictionary

student = {'name': 'Ethan', 'age': 22}

additional_info = {'age': 23, 'grade': 'A'}

print("Before update:", student)

student.update(additional_info)

print("After update:", student)

Output:

Before update: {'name': 'Ethan', 'age': 22}

After update: {'name': 'Ethan', 'age': 23, 'grade': 'A'}

Example 2: Update using iterable of tuples

student.update([('course', 'History'), ('year', 3)])

print("After second update:", student)

Output:

After second update: {'name': 'Ethan', 'age': 23, 'grade': 'A', 'course': 'History', 'year': 3}
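update() also accepts keyword arguments, which is handy when the keys are valid Python identifiers. If you want a merged result without modifying the original, dictionary unpacking is one option. The sketch below shows both; the name `merged` is illustrative.

```python
student = {'name': 'Ethan', 'age': 23}

# Keyword arguments become string keys.
student.update(grade='A', year=3)
print(student)
# {'name': 'Ethan', 'age': 23, 'grade': 'A', 'year': 3}

# Build a new dictionary instead of changing student in place.
merged = {**student, 'age': 24}
print(student['age'], merged['age'])   # 23 24
```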
